Hello future employers! This is an example of me being a technically engaged member of the profession, in that I spent my week where I was contractually forbidden from having fun during my off hours (1) working on a little side project.

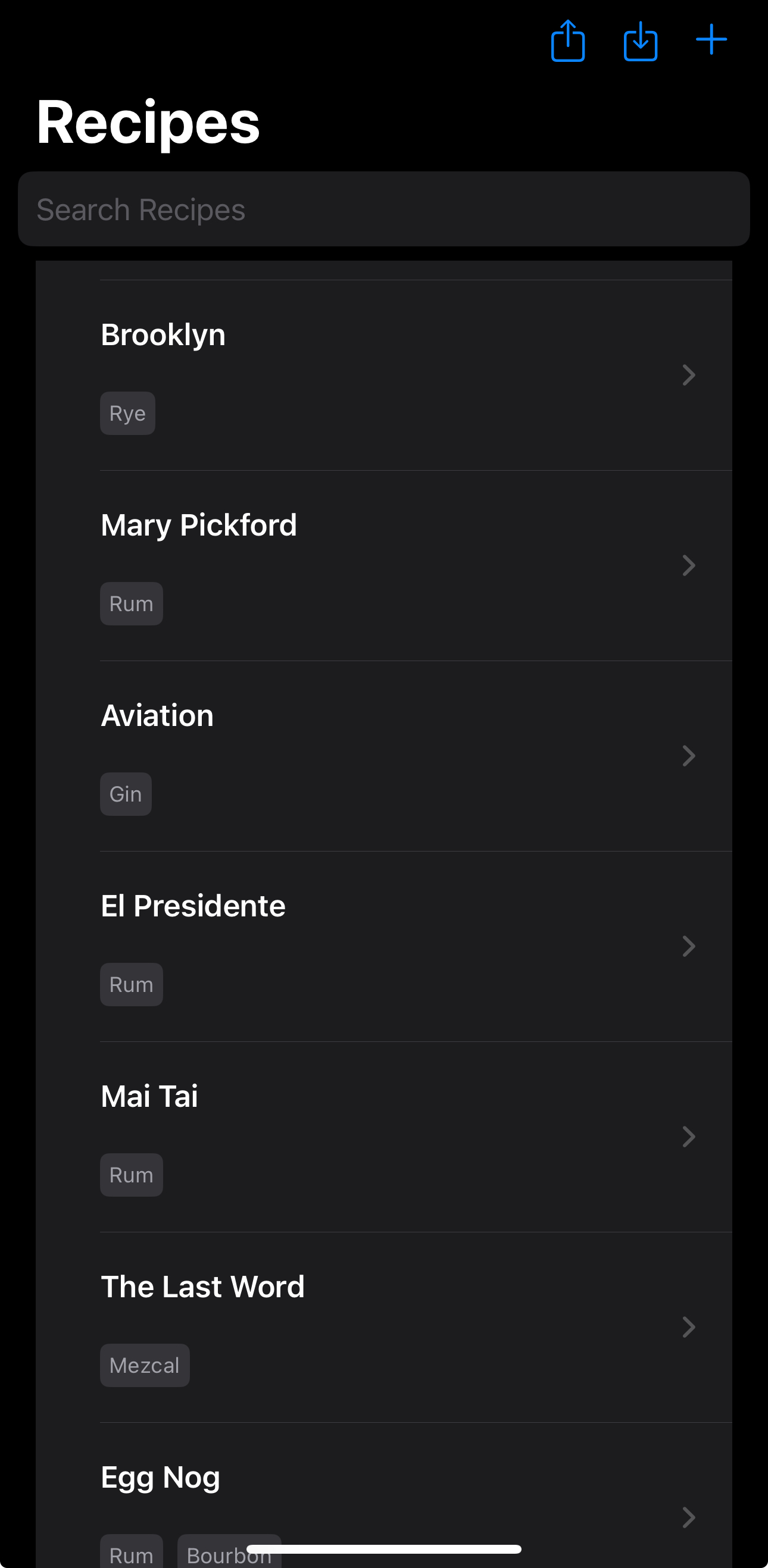

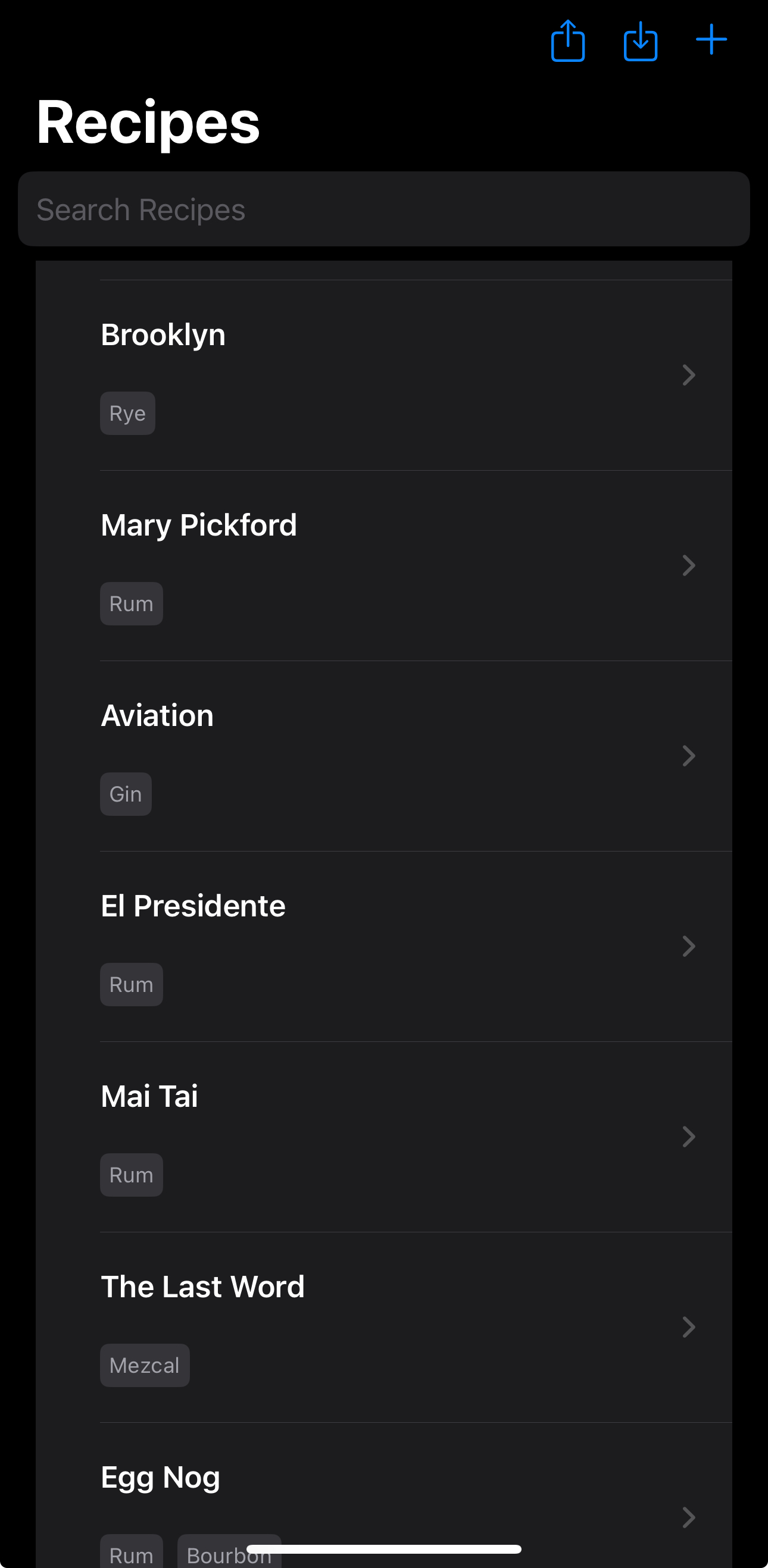

I love and have used for years a little app called “Highball” for cocktail recipes. It’s very pretty, very simple, and gets the job done! But one thing is annoying about it, and one thing is concerning about it:

- Annoying: It’s hard to transfer recipes from one person to another, or one device to another

- Concerning: It’s a side project from the Studio Neat product design company, and is a companion app to some cocktail products they no longer make, so it’s unreasonable to expect that the app will continue to survive future iOS updates

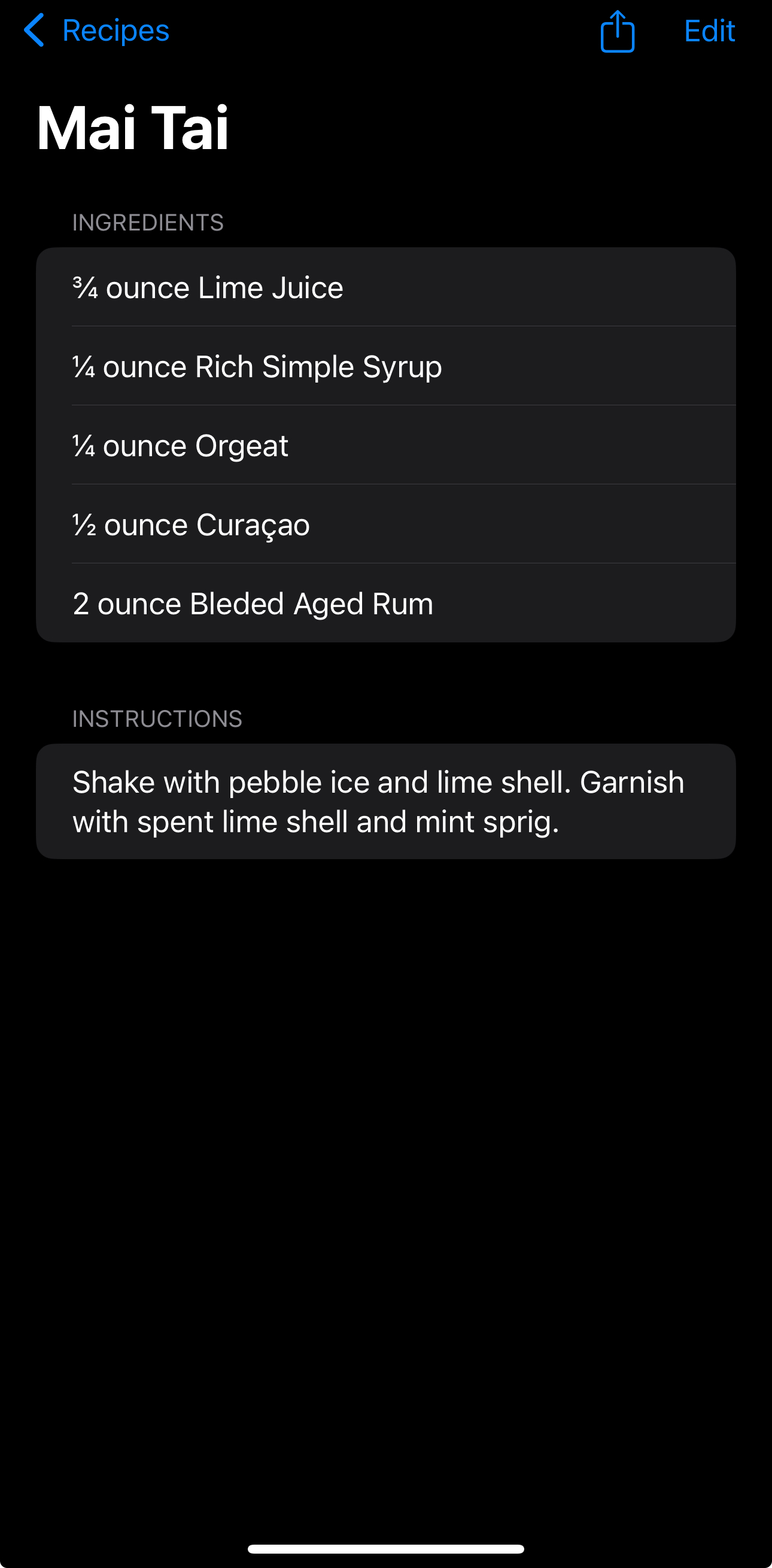

And, I kinda wanted to learn how to make iOS apps. And so I put together a more-or-less functional proof of concept of a cocktail app that is mostly just a worse version of Highball, but with one tool where you can take a photo of a recipe and it’ll use ~~~~~AI~~~~~ to convert the text in the photo and import it into the app (and some extra abilities to export and import recipes.)

It basically works, which is surprising imo, given I probably spent…. let’s say 20 hours total on it, having never written an iOS app before?

But anyway, for the SEO gods when a future prospective employer searches “Andrew Whipple developer”, here’s some stuff I learned.

Swift

I think I generally like writing Swift code, in a way that suggests I’d probably like writing Typescript. At the most basic level, while I’m very hardcoded into my Python developer brain, I like silly things like brackets, I like let and var, I like it being a typed language but having type inference. None of it is stuff that would make me switch or anything, but it’s generally intuitive and enjoyable. On the flip side, I like snake_case more than camelCase, so Python I have not abandoned you!

But overall, I like Python for its readability and its (by convention) explicitness, so I enjoy the parts of Swift that are readable and (by convention or by language design) explicit.

I really like Swift closure syntax, these make a ton of sense to me.

```swift
recipe.ingredients.contains { ingredient in
    ingredient.name.localizedCaseInsensitiveContains(searchText)
}

// as opposed to a more python-y way like
recipe.ingredients.contains(lambda ingredient: ingredient.name.localizedCaseInsensitiveContains(searchText))
// yes i know this is me mixing Swift and Python syntax
```

I theoretically like required named parameters, and the Apple-y way of using parameter names to construct pseudo-sentences out of function calls

```swift
// Read this out loud and it more or less is a sentence!
try? jsonString.write(to: tempURL, atomically: true, encoding: .utf8)
```

“some” still breaks my brain. I get it intellectually, but not at all intuitively.

```swift
var body: some View {}
```
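A minimal sketch of what `some` is doing, using a made-up `Shape` protocol (none of these names are from the app). The rough idea: `some Shape` means "one specific type that the compiler knows but the caller doesn't get to name," while `any Shape` is a box that can hold different types at runtime.

```swift
// Hypothetical protocol and types, purely for illustration.
protocol Shape {
    func area() -> Double
}

struct Square: Shape {
    let side: Double
    func area() -> Double { side * side }
}

struct Circle: Shape {
    let radius: Double
    func area() -> Double { Double.pi * radius * radius }
}

// `some Shape`: the function always returns ONE concrete type (Square here).
// The compiler knows which; callers can only rely on the Shape protocol.
func makeOpaque() -> some Shape {
    Square(side: 2)
}

// `any Shape`: a box that may hold different concrete types at runtime.
func makeExistential(round: Bool) -> any Shape {
    if round {
        return Circle(radius: 1)
    }
    return Square(side: 2)
}

let opaque = makeOpaque()
let boxed = makeExistential(round: false)
print(opaque.area(), boxed.area())
```

Seen that way, `var body: some View` is the view promising "this is one fixed (probably enormous) nested view type, please don't make me spell it out."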

I don’t like do/catch, I much prefer Python’s try/except

```swift
do {
    try <a thing that throws>
} catch {
    // handle exception
}
```

vs

```python
try:
    <a thing that throws>
except Exception:
    # handle exception
```

And Swift still has the same color of functions thing that Python does, but obviously async/await fits well with my Python brain. .task/Task() though is easier for me to latch onto than the various asyncio.gather() et al that I have to google every time I need to use them.
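For the times when something gather-shaped is needed, `async let` looks like the closest Swift analog. A small sketch, where `fetchName` and `fetchIngredientCount` are hypothetical stand-ins for slow async work and not anything from the app:

```swift
// Hypothetical stand-ins for slow async work; not from the app.
func fetchName() async -> String {
    try? await Task.sleep(nanoseconds: 10_000_000)
    return "Old Fashioned"
}

func fetchIngredientCount() async -> Int {
    try? await Task.sleep(nanoseconds: 10_000_000)
    return 4
}

// `async let` starts both child tasks right away; awaiting the tuple waits
// for both, roughly like `await asyncio.gather(a(), b())` in Python.
func loadSummary() async -> String {
    async let name = fetchName()
    async let count = fetchIngredientCount()
    let (resolvedName, resolvedCount) = await (name, count)
    return "\(resolvedName): \(resolvedCount) ingredients"
}
```

From synchronous code (a button handler, say) this would be kicked off with something like `Task { print(await loadSummary()) }`.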

Also, decoder/encoder are way more confusing to me than the python equivalent json handlers; part of this is I’m sure just unfamiliarity, but also my kingdom for whoever made (or will make) the Swift equivalent of Pydantic for automatic data validation and conversion.
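Until that Pydantic equivalent shows up, the closest thing seems to be hand-writing `init(from:)` on a `Decodable` type. A minimal sketch, assuming a hypothetical `IngredientPayload` type whose fields mirror the name/unit/amount JSON shape used later in this post (it is not the app's real model):

```swift
import Foundation

// Hypothetical model for illustration; not the app's real Ingredient type.
struct IngredientPayload: Decodable {
    let name: String
    let unit: String
    let amount: Double

    enum CodingKeys: String, CodingKey {
        case name, unit, amount
    }

    init(from decoder: Decoder) throws {
        let container = try decoder.container(keyedBy: CodingKeys.self)
        name = try container.decode(String.self, forKey: .name)
        // Conversion: treat a missing unit as an empty string instead of failing.
        unit = try container.decodeIfPresent(String.self, forKey: .unit) ?? ""
        let rawAmount = try container.decode(Double.self, forKey: .amount)
        // Validation: reject negative amounts with a decoding error.
        guard rawAmount >= 0 else {
            throw DecodingError.dataCorruptedError(
                forKey: .amount, in: container,
                debugDescription: "amount must not be negative")
        }
        amount = rawAmount
    }
}

func decodeIngredients(from json: String) throws -> [IngredientPayload] {
    try JSONDecoder().decode([IngredientPayload].self, from: Data(json.utf8))
}
```

It works, but every default and every validation rule is manual, which is exactly the boilerplate Pydantic makes disappear.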

SwiftUI (+ iOS)

My brain does not fundamentally get declarative code (yet.) I can tell because I have a View for a recipe card that currently looks like this:

```swift
import SwiftUI

struct CardView: View {
    let recipe: Recipe

    private func ingredientChip(_ ingredient: String) -> some View {
        Text(ingredient)
            .padding(5)
            .foregroundColor(.secondary)
            .background(Color.secondary.opacity(0.2))
            .cornerRadius(5)
    }

    private func getIngredientChips(ingredients: [Ingredient]) -> some View {
        let possibleIngredients = ["Rum", "Vodka", "Gin", "Tequila", "Mezcal", "Whisky", "Whiskey", "Scotch", "Rye", "Bourbon"]
        var presentIngredients = [String]()
        for ingredient in possibleIngredients {
            let filteredIngredients = ingredients.filter { $0.name.localizedCaseInsensitiveContains(ingredient) }
            if !filteredIngredients.isEmpty {
                presentIngredients.append(ingredient)
            }
        }
        let result = HStack {
            ForEach(presentIngredients, id: \.self) { ingredient in
                ingredientChip(ingredient)
            }
        }
        return result
    }

    var body: some View {
        VStack(alignment: .leading) {
            Text(recipe.name).font(.headline)
            Spacer()
            getIngredientChips(ingredients: recipe.ingredients) // this is bad
                .font(.caption)
        }
        .padding()
    }
}
```

And I know that I’m chucking in this random imperative bit with getIngredientChips(), and I’m sure there’s a declarative way to do this, but this is just where my brain goes first. To get from zero -> working, I think in imperative, and declarative comes later (if at all.)
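One possible more-declarative shape for that bit, as a sketch: the for-loop collapses into a single `filter`, which describes the result instead of building it up step by step. The `Ingredient` struct here is a minimal stand-in (assumed to just have a `name`), and `baseSpirits` and `presentSpirits(in:)` are made-up names.

```swift
import Foundation

// Minimal stand-in for the app's Ingredient type (assumed shape: just a name).
struct Ingredient {
    let name: String
}

let baseSpirits = ["Rum", "Vodka", "Gin", "Tequila", "Mezcal", "Whisky", "Whiskey", "Scotch", "Rye", "Bourbon"]

// Same result as the for-loop in getIngredientChips, written as one filter:
// keep each spirit that appears in at least one ingredient name.
func presentSpirits(in ingredients: [Ingredient]) -> [String] {
    baseSpirits.filter { spirit in
        ingredients.contains { $0.name.localizedCaseInsensitiveContains(spirit) }
    }
}
```

With that in place, the view body could presumably hold `HStack { ForEach(presentSpirits(in: recipe.ingredients), id: \.self) { ingredientChip($0) } }` directly, with no helper that returns a view.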

Related to this, I am happy that this project has shown I have enough basic programming knowledge to be able to sense when it feels like I’m doing things the wrong way. For instance, I’d been trying to have the LLM Model loaded on app startup in the background, but accessed several views deep in the screen for editing an individual recipe. What works is passing it down from the App -> RecipesView -> DetailView -> DetailEditView, but that feels gross, so I strongly believe there’s a better way to do it (and did figure out a way to do it with @EnvironmentObject; maybe still not the right way, but at least feels better.) But I feel good that it feels gross, that implies I have some learned instincts!
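A stripped-down sketch of the `@EnvironmentObject` approach, with hypothetical names (`ParserStore` stands in for whatever object owns the model, and the views are reduced to almost nothing):

```swift
import SwiftUI

// Hypothetical stand-in for whatever object owns the model.
final class ParserStore: ObservableObject {
    @Published var loaded = false
}

struct CocktailApp: App {
    // Created once, at the top of the app.
    @StateObject private var parser = ParserStore()

    var body: some Scene {
        WindowGroup {
            RecipesView()
                // Injected once; any descendant view can pick it up.
                .environmentObject(parser)
        }
    }
}

struct RecipesView: View {
    var body: some View {
        // Note: no parser parameter threaded through here.
        DetailEditView()
    }
}

struct DetailEditView: View {
    // Pulled out of the environment several levels down.
    @EnvironmentObject var parser: ParserStore

    var body: some View {
        Text(parser.loaded ? "Model ready" : "Loading model…")
    }
}
```

The intermediate views never mention the parser, which is the part that makes it feel less gross. The tradeoff is that a view crashes at runtime if nothing above it called `.environmentObject(...)`. In a real app the `App` struct would also carry the `@main` attribute.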

I still don’t really get the difference between @State, @StateObject, and @Binding.
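For reference, a rough sketch of the usual division of labor between the three, with made-up names (`TimerModel`, `ParentView`, `FavoriteToggle`):

```swift
import SwiftUI

// Hypothetical reference-type model.
final class TimerModel: ObservableObject {
    @Published var seconds = 0
}

struct ParentView: View {
    // @State: a simple value that this view owns.
    @State private var isFavorite = false
    // @StateObject: a reference-type object that this view creates and owns.
    @StateObject private var timer = TimerModel()

    var body: some View {
        VStack {
            Text("Seconds: \(timer.seconds)")
            // `$isFavorite` hands the child a Binding to the parent's state.
            FavoriteToggle(isOn: $isFavorite)
        }
    }
}

struct FavoriteToggle: View {
    // @Binding: read/write access to state that some other view owns.
    @Binding var isOn: Bool

    var body: some View {
        Toggle("Favorite", isOn: $isOn)
    }
}
```

So, very roughly: `@State` and `@StateObject` both mean "I own this" (value vs. object), and `@Binding` means "someone else owns this, but I can change it."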

The use of enums all over the place is interesting.

```swift
Text(ingredient)
    .padding(5)
    .foregroundColor(.secondary) // note the .secondary enum
    .background(Color.secondary.opacity(0.2))
    .cornerRadius(5)
```

I think I like it, re: the earlier point about how I like readability and explicitness. That said, being new to SwiftUI and iOS frameworks, I have no intuition or knowledge about what those enums will be and where they’ll show up (though Xcode is tolerable for code completion suggestions on that front.)
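One wrinkle worth noting: strictly speaking, `.secondary` there is a static property on `Color` rather than an enum case (and `.utf8` is likewise a static property on `String.Encoding`). The leading-dot shorthand works for both, which is part of why it's hard to guess what will be available. A sketch with hypothetical types:

```swift
// Hypothetical types, just to show the leading-dot shorthand.
enum Garnish {
    case orangePeel
    case cherry
}

struct Tint {
    let name: String
    // A static property, not an enum case.
    static let secondary = Tint(name: "secondary")
}

func describe(garnish: Garnish, tint: Tint) -> String {
    "\(garnish) in \(tint.name)"
}

// The compiler infers each type from the parameter, so both arguments
// can be written with a leading dot.
let label = describe(garnish: .orangePeel, tint: .secondary)
print(label)
```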

And overall—aforementioned fact that my brain works in imperative aside—I think SwiftUI looks like a fantastic way to get from zero -> functional in terms of design, and definitely faster than any of my previous half-hearted attempts over the past decade to learn UIKit + MVC patterns.

It seems much much much more difficult to get from functional to good (let alone great) unless you are committed to making your app just look like an Apple sample app. I’ve heard through podcasts with real iOS developers that there are some hard and fast limits to things you can do with SwiftUI, and it’s not fully-featured enough for a complex production app without having to dip into UIKit, but I haven’t experienced that (outside of some file handling/VisionKit stuff, but nothing actually UI related.)

However, my app is very simple, and has no custom style yet, so I’m a terrible test case!

Also lol I have no idea how to do tests in Swift or iOS or SwiftUI. So I didn’t!

AI (as a pair programmer)

I ended up using AI a lot in two different ways on this mini project: one is as a pair programmer (/fancy google) to help guide and build stuff, which I’ll talk about here!

I started playing around with ChatGPT et al probably 3 months ago, and started (in my work context) using Copilot autocomplete and Amazon Q a bit for VSCode. For this, I’m working directly in Xcode, so I’m not accessing Copilot or Q (plus I think my Q access is through my work account.) So in this case, I’ve mostly been using the free version of ChatGPT with a bit of a test of Claude, and using their chat interfaces.

Also, in a work context, I’m mostly working on things I understand pretty well at this point (using Python to write endpoints for FastAPI and Django apps) so I fall out to ChatGPT et al about as often as I would’ve fallen out to Google (and even still, I’ll usually send the same query to vanilla google and ChatGPT/Q.)

In this case, I’m new to iOS and Swift! So I used ChatGPT a ton!

As I’ve compared notes with coworkers, I’ve found this use of AI chat tools for coding to be most useful in contexts where:

- I already know how to do a thing, I just don’t want to do it manually (“convert this if/else block into a switch statement” or “fill out this enum” or the equivalent)

- I already know how to do a thing in another context, but don’t know how to do it here (“What’s the Django equivalent of some SQL statement?” or “What’s the mongoengine equivalent of some Django query?”)

- I know what I want done but don’t know how to do it, and I don’t care (or can’t tell) if the results are good

I ran into a couple cases of the first two (“How do I format the string for a double without any decimal points in swift?” or “Make this codable” for a big class that I don’t want to write out the coding keys for.) But most of this project has involved the 3rd: I have no compunctions, cares, or even ability to tell whether I’m writing good Swift, I just want to write working Swift!

To that end, a key element of #3 is that it needs to be something I can verify immediately, which is where Swift being a compiled language is nice; it’ll crash out at the build phase when (and yes when, not if, lol) ChatGPT gets something wrong. I generally find that if I just give ChatGPT the error string, it’ll figure it out. Whether it figures out the right or best solution, I can’t tell, but it usually gets to working.

In contrast, I find I cannot really use ChatGPT et al for any sizable amount of Python code because I have too high standards for any Python code that goes out with my name on the commit. And it is frequently (again, to my style preferences) bad. In particular, it’s overly verbose and overly twisty in its logic and control flow. The “AIs are your army of junior programmers” quip seems to hold true, because for Python I do think it bears a striking similarity to the work I would’ve done as a junior developer. Which I guess same as above, where this has been an ego boost proving to myself I do have some legitimate programming instincts, I now get the opportunity to cringe at the equivalent of what I would’ve written years ago. Or, the opportunity to cringe at the Swift I am currently writing, because I assume it is bad and amateurish and I don’t care!

Similarly, I am not a good front-end developer, and so I like using ChatGPT at work for simple front-ends to internal tools. It is clunky, bad, not scalable, but:

- I can’t immediately tell like I can with Python, so it doesn’t offend me in the same way, and

- it’s fair game for internal tools where working > good. Unacceptable for actual customer-facing code, but fit for purpose there.

So yeah, overall ChatGPT has been a helpful companion as an on-demand Swift/SwiftUI/iOS tutor.

I’ve also re-confirmed in this experience that as I get older I’ve lost the patience to learn via working through a sample project; I’ve tried for years off and on learning Swift and iOS via tutorials and sample projects, and I always get bored because it’s not solving a problem I actually care about solving. Whereas here I feel like I’ve learned a lot, and I’ve had the motivation to continue because I want to make my own thing better.

To that, some of Apple’s instructional material has been very useful, and I got the basic UI shell running by following an unrelated Apple tutorial, but picking and choosing which lessons to follow and swapping in all of their sample app details (in their case a Scrum Meeting timer) with stuff for my cocktail app. Once I got past that point, that’s where ChatGPT became the most useful, again as more or less an on-demand tutor I could ask for instructions on specific challenges as I encountered them.

To that end, I do feel like I learned a reasonable amount, and that’s probably because I went step by step. If I had asked ChatGPT for a whole app skeleton, then nothing would stick, but by asking for each step individually I got the opportunity to actually learn how, for example, PhotoPicker works.

But it was also dumb and bad

That said, a major gigantic whiff was using ChatGPT for any instructions on how to download, convert, and embed a small LLM into my app bundle for use in the image -> recipe parsing.

I spent probably 5-6 hours going in circles as ChatGPT gave me instructions for things that would fail, hallucinated libraries that didn’t exist, gave me instructions for libraries that had breaking changes a year ago, and so on. I tried Claude as an alternative to see if it would be better, and it gave me different guidance and suggestions, but equally wrong ones.

Eventually I had enough examples to figure out the right terms to google and find some Apple documentation that led to some sample code I was able to read and copy out that eventually worked well enough. But what a total fail on the AI assistant front.

I have some sympathy because LLM tools (currently) are specifically bad at following fast-moving things because they don’t “learn” much after their training cutoff, and AI development is very very fast-moving. So this is a worst-case scenario for the current models. That said, it was massively frustrating, especially in contrast to what a (relatively) useful tool they were on the iOS and SwiftUI front.

AI (as a thing to include in the app)

A lot of talk in the last two weeks in the Apple press has been about how Apple is behind the eight-ball on AI in general, and specifically with how AI integrates into Siri, with the AI-powered Siri features being disastrously behind schedule compared to their public announcements last year.

While that’s damning in terms of highlighting dysfunction between Apple’s marketing and engineering teams, to me the way bigger black mark is that Apple has (kinda by accident) built phones and devices with chips that are incredibly well suited to running AI models locally, and yet it is so difficult to figure out how to do that, and then actually do it in any sort of practical way.

As much as I’m frustrated at ChatGPT and Claude for leading me in circles around how to do this, if I take a step back I’m more frustrated at Apple. As it stands now it sure seems like LLMs themselves veer towards being commodities, where it’s pretty easy for other model developers to catch up to the state of the art (in part because Facebook and Deepseek are very invested in making them open-source commodities.) Apple, however, has a lead in chip performance for devices, millions of devices already out in the world, a huge amount of distribution and platform ownership advantages, and a brand that’s all about privacy and security.

So to my outside eye it looks like the natural “unfair advantage” they could pursue is leveraging their killer hardware, distribution quasi-monopolies, and brand promise to say to developers that iOS is the best platform for them to deploy local AI. I find it hard to believe that Apple will be able to make a better integrated LLM assistant than, say, Google, but I find it very easy to believe that Apple could make a much more attractive developer platform for integrating AI into 3rd party apps than Google ever could.

And yet!!!!!!! How is there not an easy-to-find developer doc saying “Here’s how you do it!”? How is it that I was only able to figure out a way to do it by eventually finding my way to a library that is clearly just a research project, then to the example repo linked from that library (which for some reason is where the actual packages I needed to install lived), and then to sample code that actually showed me what to do?

And that isn’t even what I actually wanted to do: my goal was to download a model to my Mac, get it into whatever format Apple needed, bundle it into my application bundle, and ship the model with the application. Ideally I’d love to fine tune it or quantize it or something along the way. Instead the only approach that ended up working was using the Apple research library to download a version of llama on app startup (which takes about 90-120 seconds, and the app needs to be open in the foreground the whole time) and then store that in what I believe is temporary storage on the device. And of course, the app sometimes crashes because it uses too much memory trying to load this far-too-big-for-purpose full-fledged LLM (and I assume would fully crash out if I wasn’t using an iPhone 15 Pro Max.)

I fully agree with John Siracusa in this week’s episode of Upgrade that the real solution Apple should do is have system APIs that plug into central Apple-provided AI and LLM capabilities that run locally, rather than requiring every app ship a model with its app bundle. But until you do that, it should at least be well-documented how to do the alternative!!!!!!

As it stands now, based on my experience with this little demo app, the best way for an iOS developer to integrate AI into their products is absolutely to get an OpenAI api key and send every request over the network. The fact that that’s the best solution when Apple has the best consumer-grade AI hardware and, again, a brand promise focused on privacy and security is ludicrous.

And especially when it stands in contrast to Apple’s earlier AI features (branded under “ML” lol.) It was 6 hours of work and a bunch of custom code to get to a pseudo-solution for my problem of “How do I parse the freeform text of a recipe into a structured Recipe object?”

What about “how do I read the text from a user’s photo?” 10 minutes of good Apple documentation and 30 lines of code, mostly just error handling.

```swift
import Vision
import UIKit

func recognizeText(from image: UIImage, completion: @escaping (String?) -> Void) {
    guard let cgImage = image.cgImage else {
        completion(nil)
        return
    }

    let requestHandler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    let request = VNRecognizeTextRequest { request, error in
        guard let results = request.results as? [VNRecognizedTextObservation] else {
            completion(nil)
            return
        }
        let recognizedText = results.compactMap { $0.topCandidates(1).first?.string }.joined(separator: "\n")
        completion(recognizedText)
    }

    do {
        try requestHandler.perform([request])
    } catch {
        print("Error performing OCR: \(error)")
        completion(nil)
    }
}
```

“Ask an LLM to pull some structured text out of this freeform text” and “Ask an ML model to pull text out of this photo” are similar questions that should have similar APIs, and I have less than zero complaints about how Apple handles the latter. It felt like magic to be able to add that feature in 10 minutes of work! And it’s absolutely to your benefit as a developer platform to give people that magical feeling as often as you can.

I did not have that feeling working with the LLM.

If this does ever become a real app I release, I’m absolutely pivoting it to the “Just use OpenAI (or Claude or whatever) over the network” approach.

Anyway, rant aside, what I’m actually doing is using MLX to load llama 3.2, certainly overkill for my case, but of the models in the MLX model registry only that, Gemma 2, and Phi 3.5 had anything close to a reasonable balance of workable results and not immediately crashing out due to memory or other installation issues. I tried loading it at app startup and tried loading only when I’m on the Edit view where it can be triggered, and the latter was way worse for performance (basically killing the app if I loaded two Edit views within the same session.) This does mean my app uses way too much memory for normal “just browsing recipes” use, again why if this ever becomes a real app I’ll switch to offloading the LLM stuff to a 3rd party service.

I found it worked much better both in terms of real performance and perceived performance to split the recipe parsing into 3 discrete steps: one to grab the name, one to grab the ingredients, one to grab the instructions (and show a progress bar as it goes through each step) vs trying to fetch the full structured JSON of a recipe.

```swift
import Foundation
import MLX
import MLXLLM
import MLXLMCommon

class RecipeParser: ObservableObject {
    private var modelContainer: ModelContainer?
    @Published var loaded: Bool = false
    let maxTokens = 1000

    init() async throws {
        loaded = false
        print("Initializing parser")
        let modelConfiguration = ModelRegistry.llama3_2_3B_4bit
        do {
            self.modelContainer = try await LLMModelFactory.shared.loadContainer(configuration: modelConfiguration)
            loaded = true
            print("Model loaded")
        } catch {
            print("Error loading model: \(error)")
        }
    }

    private func generate(systemPrompt: String, prompt: String) async throws -> String {
        // Return an empty string if the model never finished loading.
        guard loaded, let modelContainer = modelContainer else {
            return ""
        }
        let result = try await modelContainer.perform { context in
            let input = try await context.processor.prepare(
                input: .init(
                    messages: [
                        ["role": "system", "content": systemPrompt],
                        ["role": "user", "content": prompt],
                    ]))
            return try MLXLMCommon.generate(
                input: input, parameters: GenerateParameters(), context: context
            ) { tokens in
                //print(context.tokenizer.decode(tokens: tokens))
                // Stop generating once the token budget is used up.
                if tokens.count >= maxTokens {
                    return .stop
                } else {
                    return .more
                }
            }
        }
        return result.output
    }

    func parseName(recipeText: String) async throws -> String {
        let systemPrompt = """
        You are a simple tool to help parse freeform cocktail recipe text into something more structured.
        You will be given text of a recipe. Extract the name of the recipe and return that. Return only the recipe name
        and no other text. Do not return any other commentary other than the recipe name.
        """
        return try await generate(systemPrompt: systemPrompt, prompt: recipeText)
    }

    func parseInstructions(recipeText: String) async throws -> String {
        let systemPrompt = """
        You are a simple tool to help parse freeform cocktail recipe text into something more structured.
        You will be given text of a recipe. Extract the instructions of the recipe and return that. Return only the cocktail instructions
        and no other text.
        As an example "01:52
        76),
        OLD FASHIONED
        2 oz BOURBON
        ¼ oz SIMPLE SYRUP (1:1)
        1 DASH ANGOSTURA BITTERS
        1 DASH ORANGE BITTERS
        Stir the bourbon, simple syrup, and bitters in a mixing glass with ice. Strain into a rocks glass with one large ice cube. Garnish with an orange peel.
        EDIT
        OZ
        ML
        QTY: 1" should return "Stir the bourbon, simple syrup, and bitters in a mixing glass with ice. Strain into a rocks glass with one large ice cube. Garnish with an orange peel."
        and "BLINKER
        EDIT
        42 oz GRENADINE
        1 oz GRAPEFRUIT JUICE
        2 oz RYE
        Shake and strain" should return "Shake and strain"
        Do not return any commentary or explanation other than what is in the provided text.
        If you can't find any instructions to parse, return nothing.
        """
        return try await generate(systemPrompt: systemPrompt, prompt: recipeText)
    }

    func parseIngredients(recipeText: String) async throws -> [Ingredient] {
        let systemPrompt = """
        You are a simple tool to help parse freeform cocktail recipe text into something more structured.
        You will be given text of a recipe. Extract the ingredients of the recipe and return them in json of the form
        [
        {
        "name": string,
        "unit": string,
        "amount": float
        }
        ]
        Make sure to convert any fractional amounts to a valid float. For example, an ingredient like "1/2 oz of vodka" should be returned as
        {
        "name": "Vodka",
        "unit": "oz",
        "amount": 0.5
        }
        Return only this array of ingredients and make sure it is valid JSON with no comments. Do not provide any additional commentary.
        If you can't parse, return []
        """
        var ingredients = [Ingredient]()
        let rawIngredientString = try await generate(systemPrompt: systemPrompt, prompt: recipeText)
        let trimmedIngredientString = extractJSONArray(from: rawIngredientString)
        let data = Data(trimmedIngredientString.utf8)
        let decoder = JSONDecoder()
        do {
            let partialIngredients = try decoder.decode([PartialIngredient].self, from: data)
            for partialIngredient in partialIngredients {
                let newIngredient = Ingredient(name: partialIngredient.name, unit: partialIngredient.unit, amount: partialIngredient.amount)
                ingredients.append(newIngredient)
            }
        } catch let decodingError as DecodingError {
            // Malformed model output: log it and fall through to an empty list.
            print(decodingError)
        }
        return ingredients
    }

    // Pulls the outermost [...] span out of the model's reply, dropping any chatter around it.
    private func extractJSONArray(from text: String) -> String {
        guard let startIndex = text.firstIndex(of: "["),
              let endIndex = text.lastIndex(of: "]"),
              startIndex < endIndex else {
            // Also covers a "]" that appears before the first "[", which would otherwise trap.
            return "[]"
        }
        return String(text[startIndex...endIndex])
    }
}
```
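The call site for the three-step split isn't shown above. A rough sketch of how it could be wired to a progress callback, under some loud assumptions: `RecipeTextParsing`, `ParsedRecipe`, and `parseRecipe` are all hypothetical names, and ingredients are simplified to plain strings so the example stands alone.

```swift
// Hypothetical protocol covering the three calls; something like the
// RecipeParser above could conform to a variant of it.
protocol RecipeTextParsing {
    func parseName(recipeText: String) async throws -> String
    func parseIngredientNames(recipeText: String) async throws -> [String]
    func parseInstructions(recipeText: String) async throws -> String
}

// Simplified result type for the sketch.
struct ParsedRecipe {
    var name = ""
    var ingredientNames: [String] = []
    var instructions = ""
}

// Runs the three steps in sequence and reports progress (0...1) after each,
// so the UI can advance a progress bar between model calls.
func parseRecipe(
    _ text: String,
    using parser: RecipeTextParsing,
    onProgress: (Double) -> Void
) async throws -> ParsedRecipe {
    var recipe = ParsedRecipe()
    recipe.name = try await parser.parseName(recipeText: text)
    onProgress(1.0 / 3.0)
    recipe.ingredientNames = try await parser.parseIngredientNames(recipeText: text)
    onProgress(2.0 / 3.0)
    recipe.instructions = try await parser.parseInstructions(recipeText: text)
    onProgress(1.0)
    return recipe
}
```

Three small prompts each give the model one narrow job, and the progress callback gives the user something to watch during what is otherwise a long silent wait.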

I also found a little bit of prompt engineering went a long way, at least with llama; Phi 3.5 worked well out of the gate, but was more resource intensive than llama, whereas llama worked well for parsing name and instructions, but parsing out ingredients was sketchier until I added examples to the system prompt.

Anyway, these are my learnings turned rant. Overall neat little experiment, I’m sure it’ll be like 6 years before I try to make another iOS app lol.

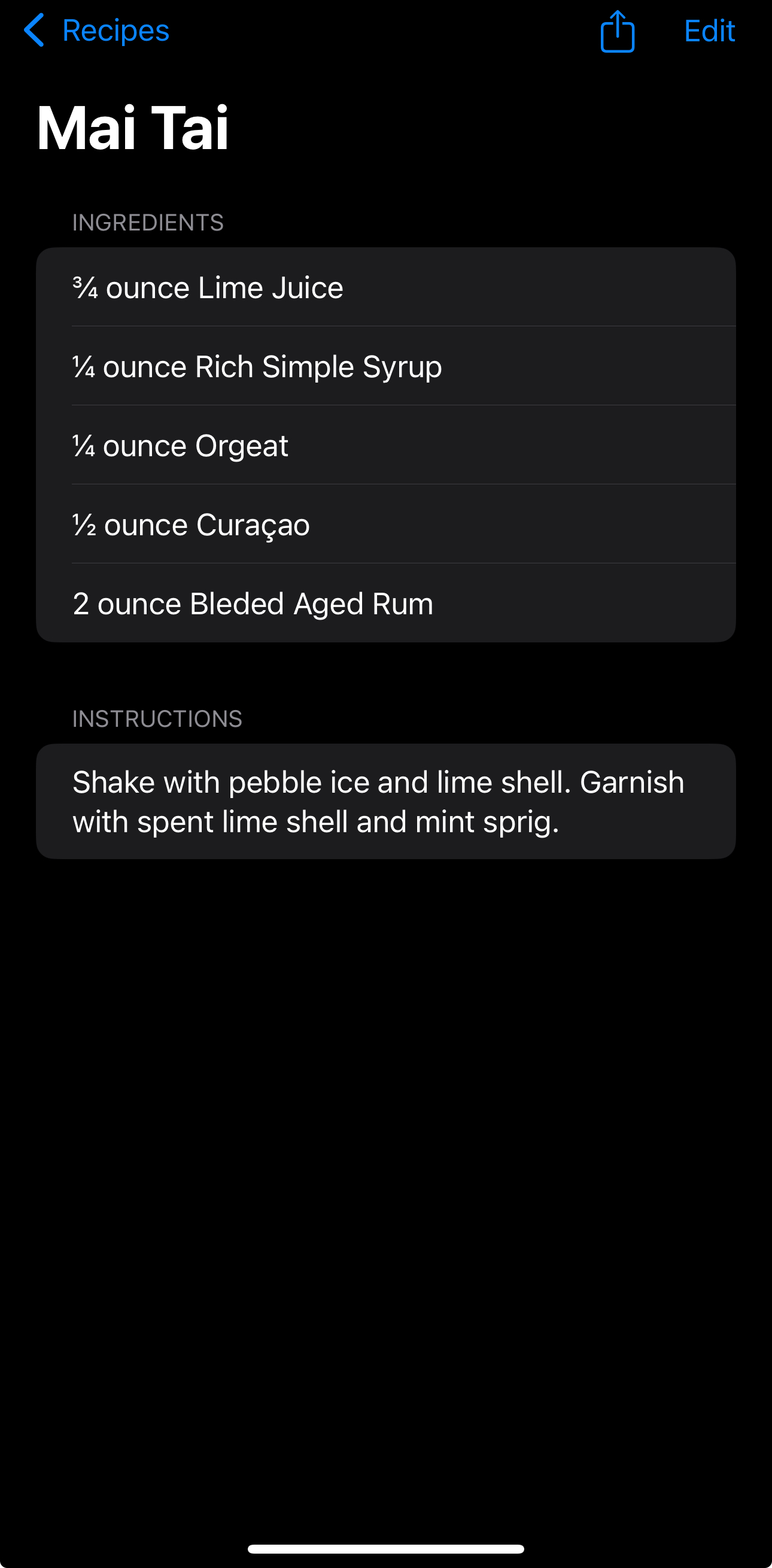

As a reward for making it through this tome, here is what I think is the best cocktail recipe I ever created(2):

(1): also known in the tech industry as being “on-call”

(2): So called because it’s a Last Word formula (equal parts base spirit, sweet liqueur, herbal liqueur, and acid) using the passionfruit and Campari of the Lost Lake cocktail, and because it’s funny to name it after a Jurassic Park sequel.